My answer to a Quora question: What percent chance is there that whole brain emulation or mind uploading to a neural prosthetic will be feasible within 35 years?

This opinion is unlikely to be popular among sci-fi fans, but I think the “chance” of mind uploading happening at any time in the future is zero. Or better yet, as a scientist I would assign no number at all to this subjective probability. [Also see the note on probability at the end.]

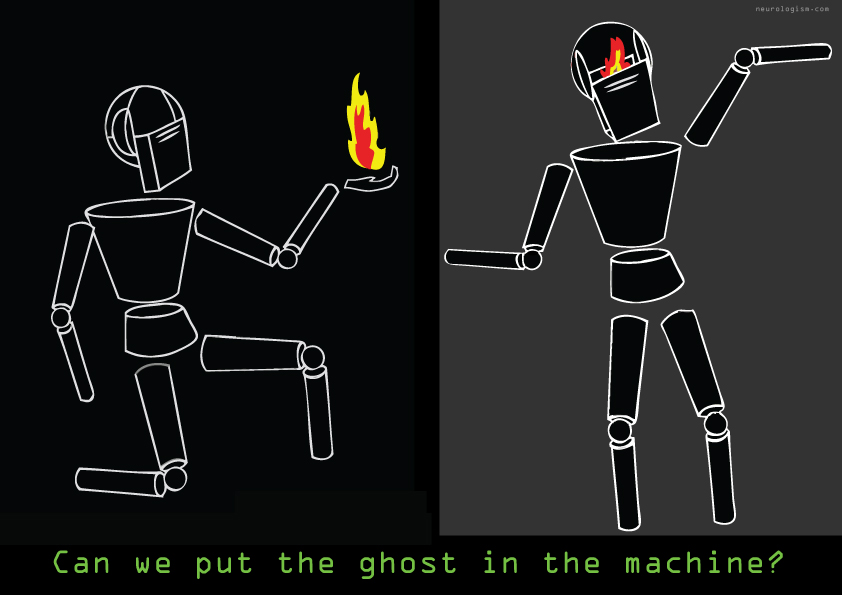

I think the concept is still incoherent from both philosophical and scientific perspectives.

In brief, these are the problems:

- We don’t know what the mind is from a scientific/technological perspective.

- We don’t know which processes in the brain (and body!) are essential to subjective mental experience.

- We don’t have any intuition for what “uploading” means in terms of mental unity and continuity.

- We have no way of knowing whether an upload has been successful.

You could of course take the position that clever scientists and engineers will figure it out while the silly philosophers debate semantics. But I think this position rests on shaky analogies with other examples of scientific progress. You might justifiably ask “What are the chances of faster-than-light travel?” You could argue that our vehicles keep getting faster, so it’s only a matter of time before we have Star Trek style warp drives. But everything we know about physics says that crossing the speed of light is impossible. So the “chance” in this domain is zero. I think that the idea of uploading minds is even more problematic than faster-than-light travel, because it does not have any clear or widely accepted scientific meaning, let alone philosophical meaning. Faster-than-light travel is conceivable at least, but mind uploading may not even pass that test!

I’ll now discuss some of these issues in more detail.

The concept of uploading a mind is based on the assumption that mind and body are separate entities that can in principle exist without each other. There is currently no scientific proof of this idea. There is also no philosophical agreement about what the mind is. Mind-body dualism is actually quite controversial among scientists and philosophers these days.

People (including scientists) who make grand claims about mind uploading generally avoid the philosophical questions. They assume that if we have a good model of brain function, and a way to scan the brain in sufficient detail, then we have all the technology we need.

But this idea is full of unquestioned assumptions. Is the mind identical to a particular structural or dynamic pattern? And if software can emulate this pattern, does it mean that the software has a mind? Even if the program “says” it has a mind, should we believe it? It could be a philosophical zombie that lacks subjective experience.

Underlying the idea of mind/brain uploading is the notion of Multiple Realizability: the idea that minds are processes that can be realized in a variety of substrates. But is this true? It is still unclear what sort of process the mind is. There are always properties of a real process that a simulation doesn’t possess. A computer simulation of water can reflect the properties of water (in the simulated ‘world’), but you wouldn’t be able to drink it! 🙂

Even if we had the technology for “perfect” brain scans (though it’s not clear what a “perfect” copy is), we run into another problem: we don’t understand what “uploading” entails. We run into the Ship of Theseus problem. In one variant of this problem/paradox we imagine that Theseus has a ship. He repairs it every once in a while, each time replacing one of the wooden boards. Unbeknownst to him, his rival has been keeping the boards he threw away, and over time he constructed an exact physical replica of Theseus’s ship. Now, which is the real ship of Theseus? His own ship, which is now physically distinct from the one he started with, or the (counterfeit?) copy, which is physically identical to the initial ship? There is no universally accepted answer to this question.

We can now explicitly connect this with the idea of uploading minds. Let’s say the mind is like the original (much repaired) ship of Theseus. Let’s say the computer copy of the brain’s structures and patterns is like the counterfeit ship. For some time there are two copies of the same mind/brain system — the original biological one, and the computer simulation. The very existence of two copies violates a basic notion most people have of the Self — that it must obey a kind of psychophysical unity. The idea that there can be two processes that are both “me” is incoherent (meaning neither wrong nor right). What would that feel like for the person whose mind had been copied?

Suppose in response to this thought experiment you say, “My simulated Self won’t be switched on until after I die, so I don’t have to worry about two Selves — unity is preserved.” In this case another basic notion is violated — continuity. Most people don’t think of the Self as something that can cease to exist and then start existing again. Our biological processes, including neural processes, are always active — even when we’re asleep or in a coma. What reason do we have to assume that when these activities cease, the Self can be recreated?

Let’s go even further: let’s suppose we have a great model of the mind, and a perfect scanner, and we have successfully run a simulated version of your mind on a computer. Does this simulation have a sense of Self? If you ask it, it might say yes. But is this enough? Even currently-existing simulations can be programmed to say “yes” to such questions. How can we be sure that the simulation really has subjective experience? And how can we be sure that it has your subjective experience? We might have just created a zombie simulation that has access to your memories, but cannot feel anything. Or we might have created a fresh new consciousness that isn’t yours at all! How do we know that a mind without your body will feel like you? [See the link on embodied cognition for more on this very interesting topic.]

And — perhaps most importantly — who on earth would be willing to test out the ‘beta versions’ of these techniques? 🙂

Let me end with a verse from William Blake’s poem “The Tyger”.

What the hammer? what the chain,

In what furnace was thy brain?

~

Further reading:

Will Brains Be Downloaded? Of Course Not!

~

EDIT: I added this note to deal with some interesting issues to do with “chance” that came up in the Quora comments.

A note on probability and “chance”

Assigning a number to a single unique event such as the discovery of mind uploading is actually problematic. What exactly does “chance” mean in such contexts? The meaning of probability is still being debated by statisticians, scientists and philosophers. For the purposes of this discussion, there are two basic notions of probability:

(1) Subjective degree of belief. We start with a statement A. The probability p(A) = 0 if I am certain that A is false, and p(A) = 1 if I am certain that A is true; values in between express partial belief. In other words, as my p(A) moves from 0 to 1, my subjective doubt about A decreases. If A is the statement “God exists” then an atheist’s p(A) is equal to 0, and a theist’s p(A) is 1.

(2) Frequency of a type of repeatable event. In this case the probability p(A) is the number of times event A happens, divided by the total number of trials. Alternatively, when all outcomes are equally likely, it is the number of outcomes that correspond to event A, divided by the total number of possible outcomes. For example, suppose statement A is “the die roll results in a 6”. There are 6 possible outcomes of a die roll, and one of them is 6. So p(A) = 1/6. In other words, if you roll an (ideal) die 600 times, you will see the side with 6 dots on it roughly 100 times.
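To make the frequency notion concrete, here is a minimal sketch in Python that simulates rolling an ideal die and counts how often a 6 comes up. The function name `relative_frequency`, the fixed seed, and the roll counts are illustrative assumptions of mine, not part of any standard method; the only point is that the counted fraction tends to settle near 1/6 as the number of rolls grows.

```python
import random


def relative_frequency(num_rolls, target=6, seed=0):
    """Return the fraction of simulated die rolls that show `target`.

    `seed` is fixed only so that the sketch gives repeatable results;
    it is an illustrative choice, not something the argument depends on.
    """
    rng = random.Random(seed)
    # Count the rolls of a fair six-sided die that land on `target`.
    hits = sum(1 for _ in range(num_rolls) if rng.randint(1, 6) == target)
    return hits / num_rolls


if __name__ == "__main__":
    # Compare a short run with a long run against the ideal value 1/6.
    for n in (600, 60_000):
        print(n, relative_frequency(n), "ideal:", 1 / 6)
```

This kind of counting is possible only because a die roll can be repeated as often as we like under the same conditions.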

Clearly, if statement A is “Mind uploading will be discovered in the future”, then we cannot use frequentist notions of probability. We do not have access to a large collection of universes from which to count the ones in which mind uploading has been successfully discovered, and then divide that number by the total number of universes. In other words, statement A does not refer to a statistical ensemble — it is a unique event. For frequentists, the probability of a unique event can only be 0 (hasn’t happened) or 1 (happened). And since mind uploading hasn’t happened yet, the frequency-based probability is 0.

So when a person asks about the “chance” of some unique future event, he or she is implicitly asking for a subjective degree of belief in the feasibility of this event. If you force me to answer the question, I’ll say that my subjective degree of belief in the possibility of mind uploading is zero. But I prefer not to assign any number, because I think the concept of mind uploading is incoherent (as opposed to infeasible). The question of its feasibility does not really arise (subjectively), because the idea of mind uploading is almost meaningless to me. Data can be uploaded and downloaded. But is the mind data? I don’t know one way or the other, so how can I believe in some future technology that presupposes that the mind is data?

More on probability theory:

Chances Are – NYT article on probability by Steve Strogatz

Interpretations of Probability – Stanford Encyclopedia of Philosophy